The Enterprise Playbook for Implementing AI Workers

A Practical Framework for Deploying AI at Scale

Executive Summary

While the potential of AI is widely acknowledged, reliable, large-scale deployment requires more than a powerful model. It demands structured planning, cross-functional alignment, disciplined testing, and a repeatable implementation framework.

Enterprises that successfully scale AI workers typically begin with a single, high-impact use case that builds organizational confidence and generates clear, early wins. With this foundation, they often expand rapidly, integrating AI workers into multiple departments and operational ecosystems.

This white paper introduces a practical, four-stage methodology for deploying AI workers in enterprise environments. It outlines the foundational elements of successful workflows, the technical and operational considerations that safeguard performance, and best practices developed across more than 150 real-world enterprise implementations. The goal is to give both business and technical leaders a clear roadmap for building AI-driven workflows that are accurate, resilient, and capable of delivering measurable impact.

Why Enterprises Are Turning to AI Workers

Enterprises today are operating under growing structural pressure. Labor shortages, rising operational costs, and increasingly complex customer and partner interactions are making traditional, people-dependent workflows harder to sustain. At the same time, businesses are expected to grow faster, expand availability, and improve service quality without increasing headcount at the same rate.

Conventional automation approaches struggle in this environment. Rule-based systems lack flexibility, break down under conversational variability, and require constant maintenance as processes evolve. As a result, they often fail to deliver the resilience and adaptability needed to support modern, high-volume operations.

AI workers represent a fundamental shift in how work is scaled. Rather than automating isolated tasks, they interpret intent, manage structured workflows, and take follow-up actions across systems and channels with consistency and speed. Organizations deploy AI workers to absorb repetitive operational load, extend capacity without linear staffing increases, and support 24/7 execution across voice, email, and messaging workflows.

The impact goes beyond cost reduction. AI workers help organizations build more sustainable operating models by reducing dependency on scarce labor, minimizing process bottlenecks, and enabling growth without proportional increases in manual effort. When implemented strategically, they become an operational layer that allows enterprises to scale efficiently, maintain reliability, and focus human teams on higher-value decision-making and exception handling.

The Four-Stage Implementation Framework

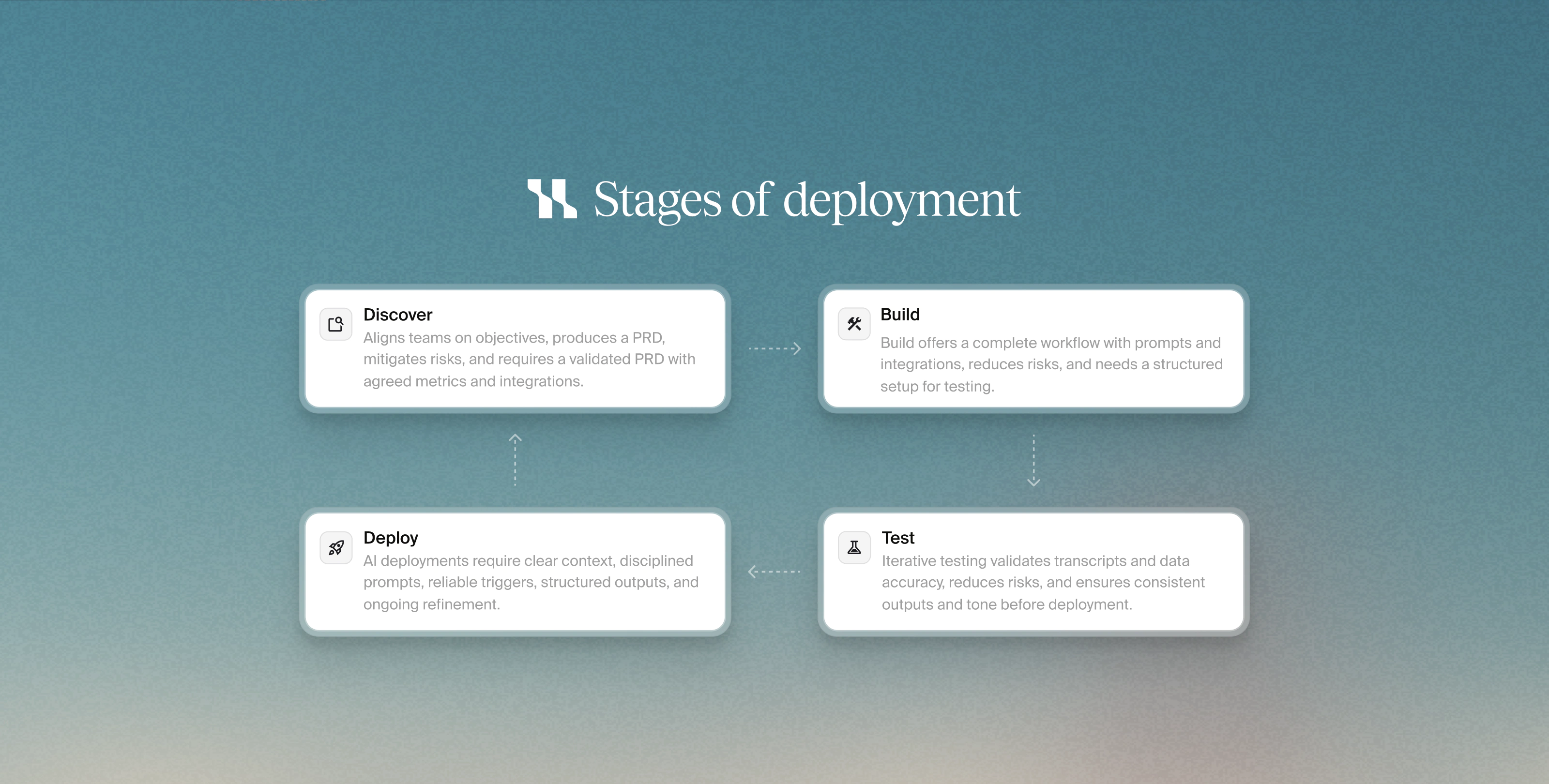

Enterprises achieve the strongest results when AI deployments follow a clear, phased methodology. A structured framework ensures alignment between teams, reduces risk during development, and creates a predictable path from early discovery to full-scale rollout.

Stage 1: Discover

Successful implementations begin with a clear understanding of the business objective and the operational context of the use case. During this stage, teams define what the AI worker must accomplish, the data it will access, how outcomes should be structured, and which scenarios must be supported.

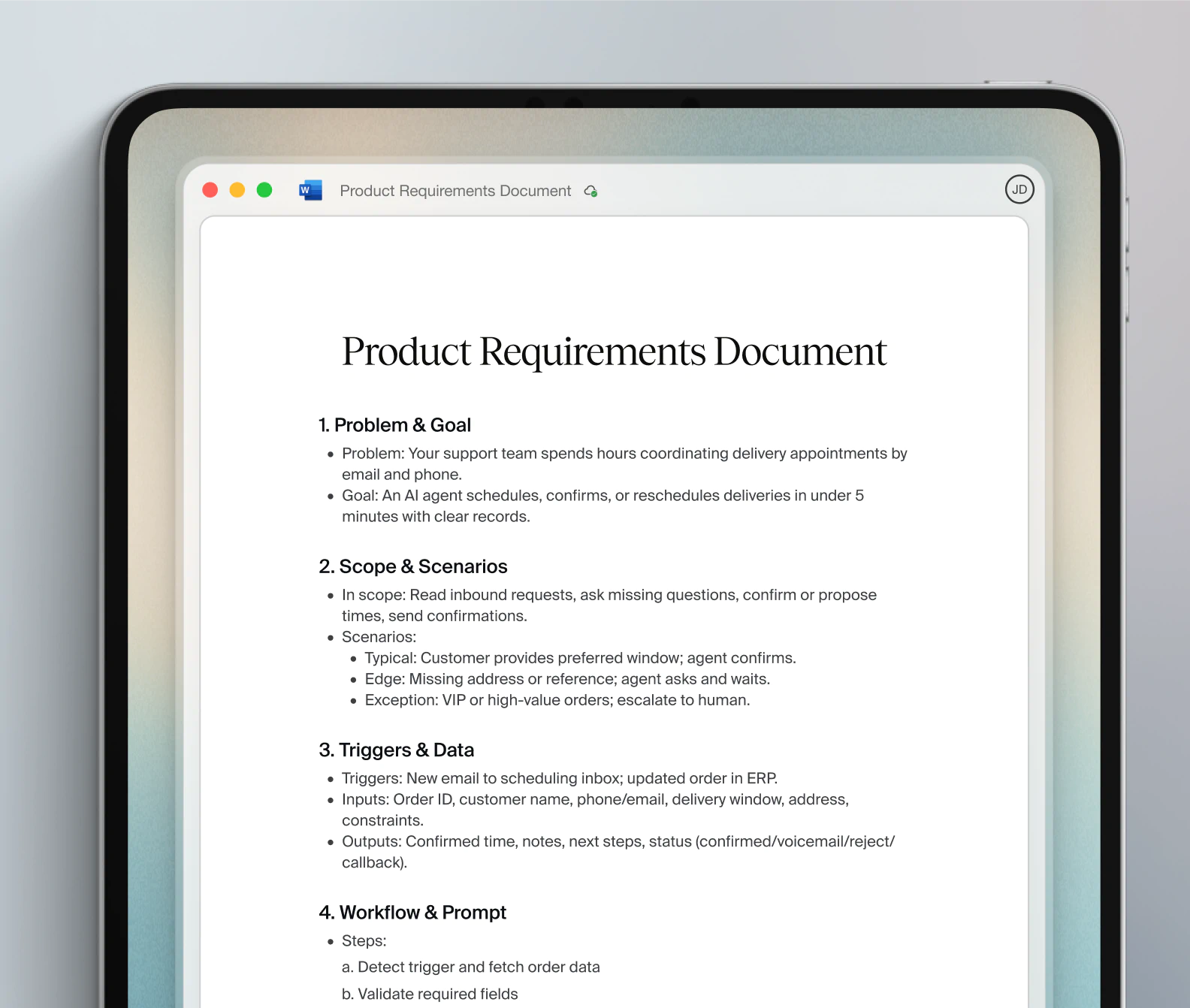

A key output of this phase is the Product Requirements Document (PRD). The PRD captures triggers, required inputs and outputs, integration needs, escalation rules, exception handling, and success metrics. It serves as a shared reference point for business stakeholders, operational leaders, IT teams, and executive sponsors, ensuring alignment before development begins.
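One way to keep a PRD checkable is to mirror its sections in a small data structure. The sketch below is illustrative only: the `WorkflowPRD` class, its field names, and the appointment-confirmation example are assumptions made for this illustration, not a prescribed template.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class WorkflowPRD:
    """Hypothetical PRD record; fields mirror the sections listed above."""

    problem_statement: str
    triggers: list[str]
    required_inputs: list[str]
    required_outputs: list[str]
    integrations: list[str] = field(default_factory=list)
    escalation_rules: list[str] = field(default_factory=list)
    exception_handling: list[str] = field(default_factory=list)
    success_metrics: dict[str, float] = field(default_factory=dict)

    def missing_sections(self) -> list[str]:
        """Return the names of sections that are still empty."""
        return [name for name, value in vars(self).items() if not value]


# Illustrative draft: only the first four sections are filled in so far.
prd = WorkflowPRD(
    problem_statement="Confirm delivery appointments by phone",
    triggers=["new appointment record"],
    required_inputs=["contact phone number", "appointment window"],
    required_outputs=["confirmation status"],
)
print(prd.missing_sections())
```

A check like `missing_sections()` gives stakeholders a quick view of which parts of the PRD still need agreement before development begins.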

Strong discovery reduces uncertainty, minimizes rework, and establishes a stable foundation for the build phase.

Common Risks at This Stage

The primary risk during discovery is incomplete or superficial alignment. If requirements are loosely defined or edge cases are overlooked, teams may proceed with assumptions that later require significant rework. Underestimating integration complexity or failing to agree on success criteria can also introduce delays and instability downstream.

Requirements to Proceed to the Build Stage

To move forward, teams should have a validated PRD, agreement on the problem statement and success metrics, and a shared understanding of triggers, data inputs, outputs, and escalation paths. All required integrations should be identified, even if they are not yet fully implemented.

Stage 2: Build

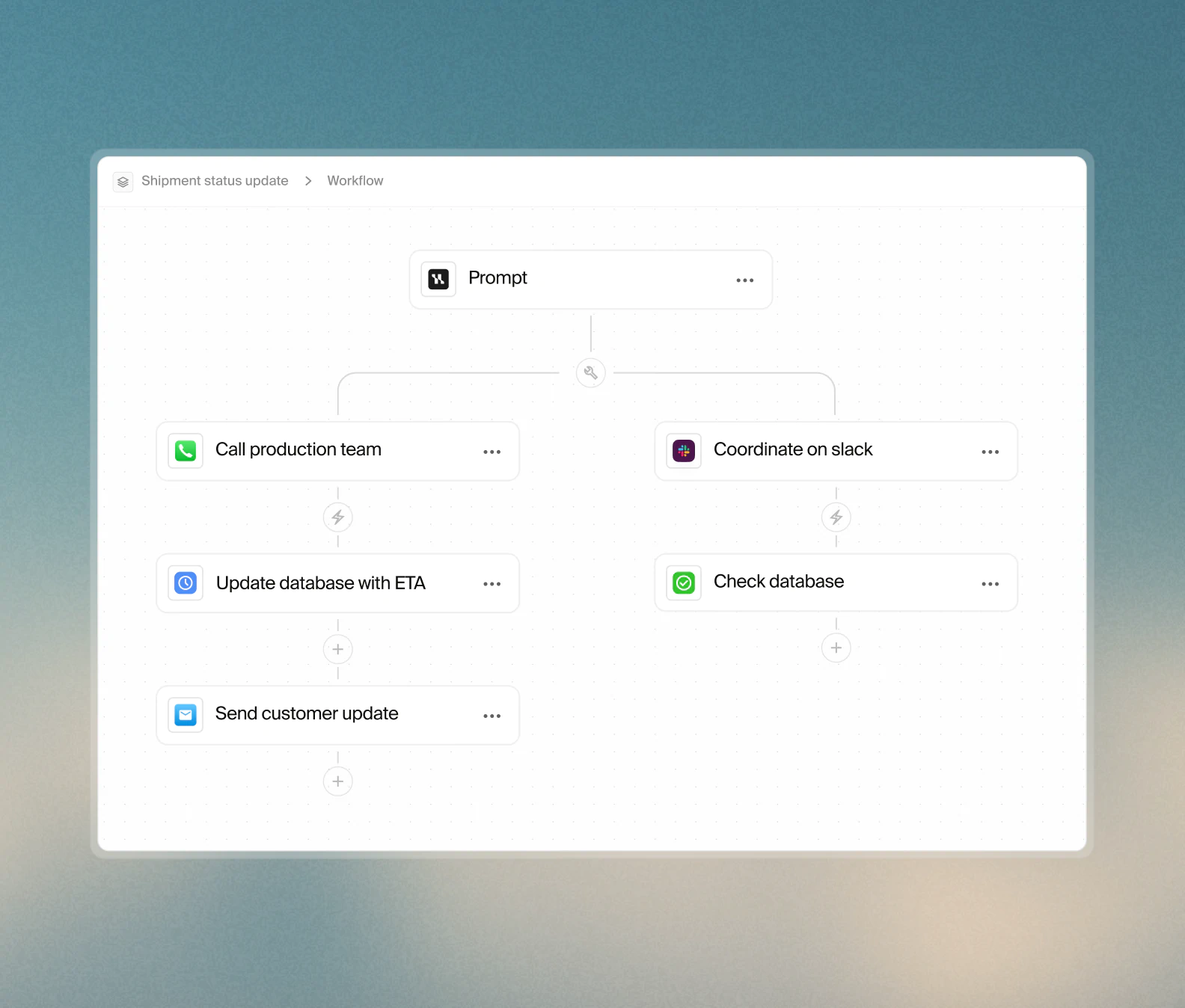

Once requirements are set, development begins on the first version of the workflow. This initial version includes the workflow’s trigger, the AI worker configuration, and supporting logic for data extraction, classification, and operational decision-making.

The AI worker's prompt is the core of this phase. Prompts define the worker's goals, conversational tone, operational steps, and responses to both standard and edge-case scenarios. Strong prompts follow a structured format, remain concise, avoid contradictions, and typically include example scripts to guide the worker toward natural and productive dialogue.
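The structured format described above can be sketched as a small template that renders goal, tone, steps, edge cases, and an example script into a single prompt. The `WorkerPrompt` class, the section labels, and the sample content are assumptions for illustration; real platforms may expect a different layout.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class WorkerPrompt:
    """Hypothetical container for the parts of a structured prompt."""

    goal: str
    tone: str
    steps: list[str]
    edge_cases: dict[str, str] = field(default_factory=dict)
    example_script: str = ""

    def render(self) -> str:
        """Join the non-empty sections into one prompt, in a fixed order."""
        numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(self.steps, 1))
        sections = [f"GOAL\n{self.goal}", f"TONE\n{self.tone}", f"STEPS\n{numbered}"]
        if self.edge_cases:
            rules = "\n".join(
                f"- If {case}: {action}" for case, action in self.edge_cases.items()
            )
            sections.append(f"EDGE CASES\n{rules}")
        if self.example_script:
            sections.append(f"EXAMPLE\n{self.example_script}")
        return "\n\n".join(sections)


# Illustrative content only.
prompt = WorkerPrompt(
    goal="Confirm the delivery appointment and record the outcome.",
    tone="Polite, brief, and professional.",
    steps=["Greet the contact.", "State the appointment window.", "Ask for confirmation."],
    edge_cases={"the contact asks for a different time": "offer to escalate to a dispatcher"},
)
print(prompt.render())
```

Keeping each section separate makes it easier to review a prompt for contradictions and to change one part without disturbing the rest.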

Tooling and integrations are also addressed during this stage. Some workflows operate entirely within the AI platform, while others rely on connected email accounts, APIs, file storage systems, or operational tools. Establishing these pathways early ensures a smooth transition into testing and validation.

Common Risks at This Stage

During the build phase, the most common risks relate to incomplete workflow logic or overly optimistic assumptions about how the AI worker will behave in real-world scenarios. Prompts that are too vague, contradictory, or overly complex can lead to inconsistent behavior. Inadequate error handling or incomplete integration setup can also introduce instability that may not surface until later stages.

Requirements to Proceed to the Test Stage

To advance to testing, the initial workflow should be fully constructed end to end. Prompts should be structured, reviewed, and aligned with the intended operational behavior. All required integrations, tools, and configurations should be in place, even if they are still using test data or sandbox environments. The workflow should be executable and capable of producing structured outputs.

Stage 3: Test

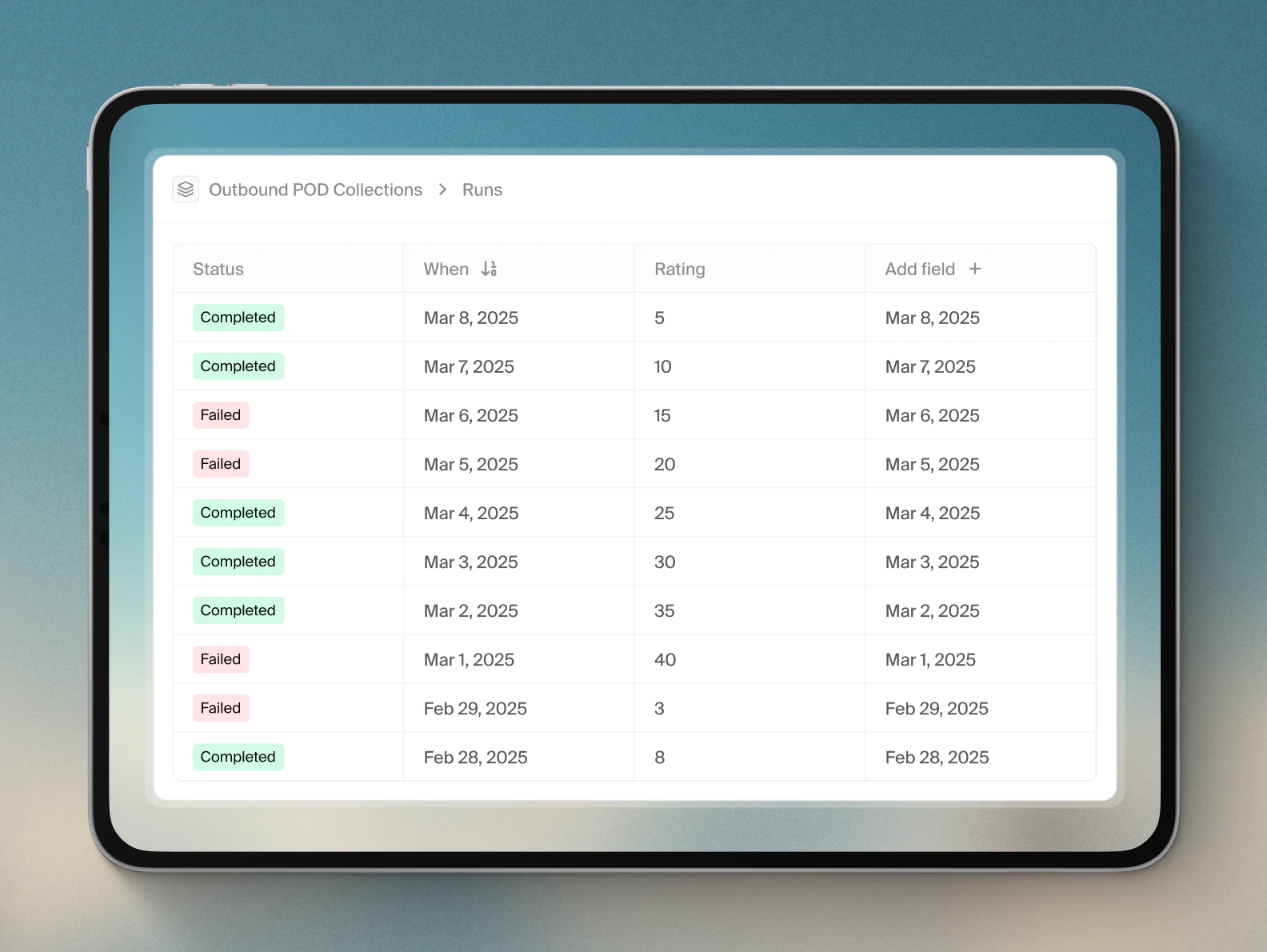

Testing is where workflows evolve from conceptual designs into reliable operational systems. Teams review transcripts, check data extraction accuracy, validate classification logic, and refine the workflow’s handling of both ideal and difficult scenarios.

Validation focuses on the quality of the interaction: whether the worker asks the right questions, maintains the appropriate tone, handles unexpected responses, and captures essential details. Testing is inherently iterative. Updates to prompts, extraction rules, and workflow logic can dramatically improve clarity, accuracy, and consistency.

By the end of this stage, the workflow should demonstrate predictable behavior across diverse inputs, generate structured outputs suitable for reporting or automation, and align fully with the requirements defined during discovery.
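Checking behavior across diverse inputs can be sketched as a table of scenarios run against the worker. In this illustration `stub_worker` is a deliberately naive keyword matcher standing in for a real AI worker call, and the scenario names, replies, and outcome labels are all hypothetical.

```python
from __future__ import annotations

from typing import Callable, NamedTuple


class Scenario(NamedTuple):
    name: str
    reply: str      # what the contact says
    expected: str   # outcome the workflow should record


def run_scenarios(worker: Callable[[str], str], scenarios: list[Scenario]) -> list[str]:
    """Return the names of scenarios whose outcome differs from the expected one."""
    return [s.name for s in scenarios if worker(s.reply) != s.expected]


def stub_worker(reply: str) -> str:
    """Placeholder for the real worker: naive substring matching."""
    text = reply.lower()
    if "yes" in text:
        return "confirmed"
    if "no" in text:
        return "declined"
    return "escalate"


SCENARIOS = [
    Scenario("ideal yes", "Yes, that works", "confirmed"),
    Scenario("ideal no", "No, please cancel", "declined"),
    Scenario("off-topic", "Who is this?", "escalate"),
    Scenario("ambiguous", "I don't know yet", "escalate"),
]

failures = run_scenarios(stub_worker, SCENARIOS)
print(failures)
```

The two ideal scenarios pass, but the ambiguous reply is misclassified as a decline because "know" contains "no". A test set limited to ideal cases would not have exposed this.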

Common Risks at This Stage

A key risk during testing is false confidence. Limited test coverage or focusing only on ideal scenarios can mask issues that appear under real-world conditions. Other risks include inaccurate data extraction, misclassification of outcomes, or conversational behavior that does not meet quality or compliance expectations.

Requirements to Proceed to the Deploy Stage

Before deployment, the workflow should demonstrate consistent behavior across a wide range of scenarios, including edge cases. Data extraction and classification outputs should be validated for accuracy, and transcripts should reflect the intended tone and clarity. Any known failure modes should be understood and handled through retries, escalation logic, or monitoring. Stakeholders should agree that the workflow meets the success criteria defined during discovery.

Stage 4: Deploy

After validation, the workflow moves through staged deployment, typically progressing from development to staging and eventually to production. This controlled rollout ensures reliability, prevents disruptions, and allows teams to address any final refinements.

In production, workflows rely on safeguards such as automated retries, real-time monitoring, error handling logic, and reporting pipelines. These features ensure consistent performance, even during high-volume or time-sensitive operations.
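A minimal sketch of the retry safeguard, assuming exponential backoff followed by escalation once attempts are exhausted. The function name `run_with_retries`, its parameters, and the backoff schedule are illustrative assumptions, not a description of any particular platform.

```python
from __future__ import annotations

import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    step: Callable[[], T],
    escalate: Callable[[Exception], T],
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `step`, retrying on any exception with exponential backoff.

    After `max_attempts` failures, hand the last error to `escalate`.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error: Exception | None = None
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except Exception as exc:
            last_error = exc
            if attempt < max_attempts:
                # Wait base_delay, then 2x, 4x, ... before the next attempt.
                sleep(base_delay * 2 ** (attempt - 1))
    assert last_error is not None
    return escalate(last_error)


# Illustrative use: a step that fails twice before succeeding.
# Delays are recorded instead of slept so the example runs instantly.
delays: list[float] = []
attempts = {"count": 0}


def flaky_lookup() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary outage")
    return "record found"


result = run_with_retries(
    flaky_lookup, escalate=lambda exc: "handed to human", sleep=delays.append
)
print(result, delays)
```

Passing the `sleep` function as a parameter keeps the retry logic easy to test, and routing exhausted retries to `escalate` ensures that a persistent failure reaches a person rather than being silently dropped.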

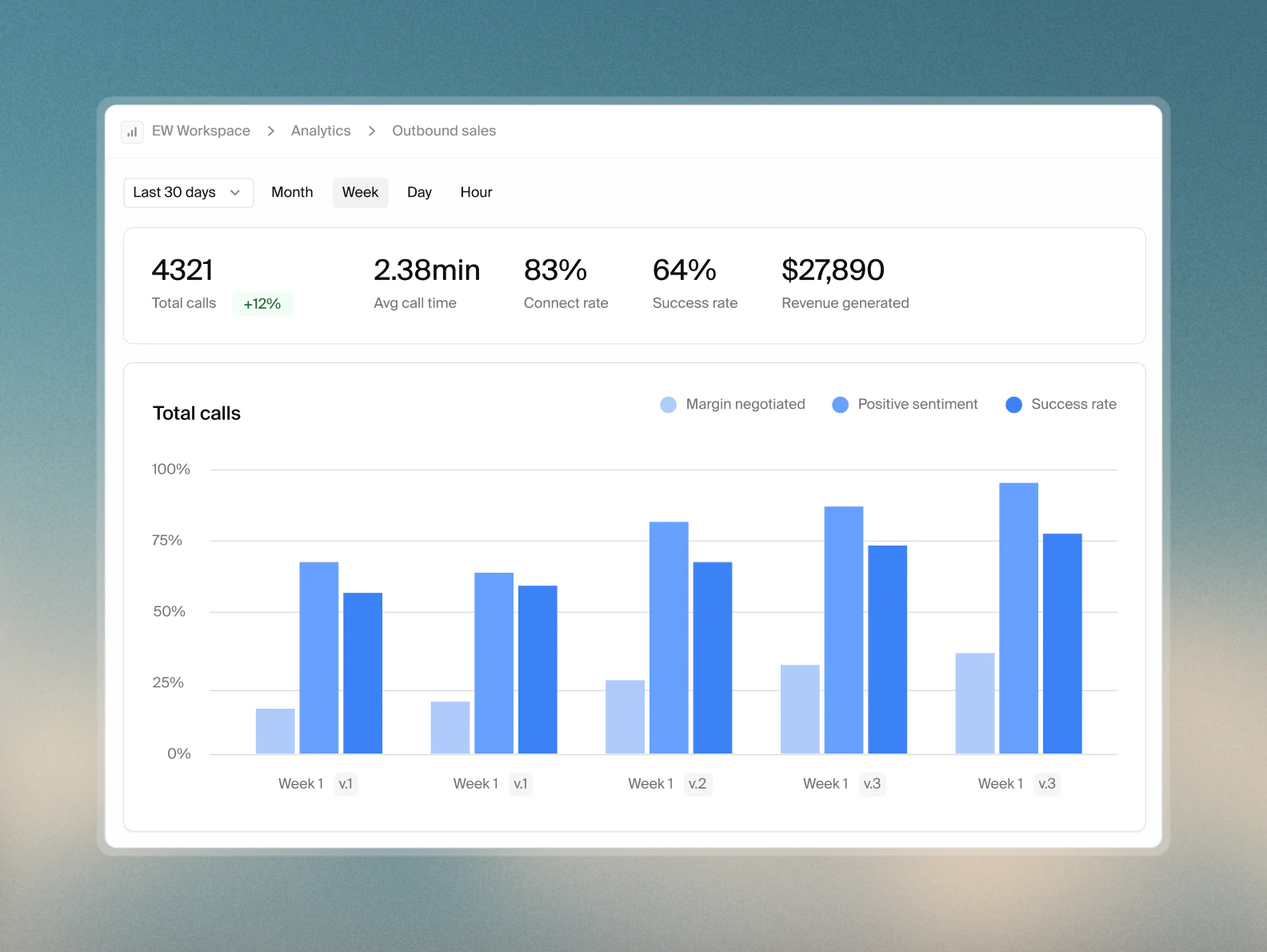

Analytics become essential during rollout and at scale, covering both operational and business-level indicators.

Organizations commonly track completion rates, success rates, failure modes, and average handle times. These metrics provide insight into the workflow’s reliability and efficiency.
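These operational metrics can be computed from per-run records, as in the sketch below. The `Run` record and the metric definitions are assumptions for illustration: here both rates are taken over all runs, and average handle time is taken over completed runs only. Teams should adopt whichever definitions were agreed during discovery.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Run:
    """Hypothetical record of one workflow execution."""

    completed: bool            # the interaction reached its end state
    succeeded: bool            # the intended outcome was achieved
    handle_seconds: float
    failure_mode: Optional[str] = None


def summarize(runs: list[Run]) -> dict:
    """Compute completion rate, success rate, average handle time, and failure counts."""
    if not runs:
        raise ValueError("no runs to summarize")
    completed = [r for r in runs if r.completed]
    avg_handle = (
        sum(r.handle_seconds for r in completed) / len(completed) if completed else 0.0
    )
    return {
        "completion_rate": len(completed) / len(runs),
        "success_rate": sum(r.succeeded for r in runs) / len(runs),
        "avg_handle_seconds": avg_handle,
        "failure_modes": Counter(r.failure_mode for r in runs if r.failure_mode),
    }


# Illustrative data only.
runs = [
    Run(True, True, 100.0),
    Run(True, True, 200.0),
    Run(True, False, 300.0, "wrong_extraction"),
    Run(False, False, 50.0, "contact_hung_up"),
]
summary = summarize(runs)
print(summary)
```

Counting failure modes alongside the rates shows not only how often the workflow falls short but also where to focus the next round of refinement.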

Business impact is measured through reductions in manual workload, decreases in processing time, improvements in throughput, and a greater ability to handle high-volume interactions in parallel. Experience metrics, such as clarity of communication, consistency, and accuracy in escalation, round out the picture, ensuring the AI worker meets expectations for both quality and performance.

These KPIs offer a comprehensive view of workflow effectiveness and support ongoing optimization.

Best Practices for Scaling AI Across the Enterprise

AI workers represent a meaningful opportunity for operational transformation. With the right playbook, organizations can implement automation that is reliable, extensible, and aligned with long-term strategic goals.

Starting with one clearly defined workflow allows teams to demonstrate early value and secure the buy-in required for further development. It is only the start.

Continuing to apply the four-stage implementation framework means expanding automation to additional workflows and departments, and broadening the scope of existing workflows to achieve outcomes that were not imaginable before the era of the AI workforce.