Technical overview

This article provides a detailed overview of the underlying architecture, integrated AI models, and auditing.

Architecture

HappyRobot is an AI orchestration platform purpose-built for running AI workers at scale, including real-time voice interaction. The sections below cover the underlying architecture, the integrated AI models, and AI auditing. They are intended to inform technical stakeholders evaluating the platform’s resilience, extensibility, and operational robustness at enterprise scale.

Architecture at a Glance

- Cloud-native, containerized estate. All runtime services are deployed on Kubernetes inside an isolated virtual network.

- Dual edge paths. REST / webhook traffic is fronted by a web application firewall and load balancer; real-time voice enters through a hardened SIP gateway. Both paths terminate TLS and forward only validated traffic to the cluster.

- Stateless vs stateful split. Orchestration, business logic, and realtime media handlers scale out horizontally, while durable artifacts (call recordings, transcripts, analytics) live in managed cloud data stores with built-in replication.

- Observability first. Metrics, logs and traces are aggregated in-cluster and streamed to a central monitoring stack for 24 × 7 SRE coverage.

Model Interoperability

- Pluggable pipeline. Automatic-speech-recognition, language-model and text-to-speech stages are accessed through lightweight adapters, so vendors can be swapped—or self-hosted options inserted—without touching telephony code.

- Vendor diversity. The default stack uses best-in-class commercial engines, but the orchestration layer can route individual tenants (or even individual calls) to alternative endpoints for data-sovereignty or performance reasons.

- Forward compatibility. Upcoming multimodal skills (e.g., image-to-text, document Q&A) register with the same contract, protecting downstream integrations from change.

- Platform-exclusive models. To push latency down and voice quality up, HappyRobot runs a series of proprietary models—such as enhanced TTS, voice-activity / end-of-turn detection, speech-cleanup filters, etc.—directly inside the cluster. These assets are not exposed as standalone APIs; they remain private to our platform, invoked transparently through the same adapter layer.
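
The adapter idea described above can be illustrated with a minimal sketch. Everything named here (`SpeechToText`, `VendorAStt`, `SelfHostedStt`, `SttRouter`, the tenant ID) is hypothetical and chosen for illustration; it is not HappyRobot's actual interface, only one way a common contract plus per-tenant routing might look.

```python
from dataclasses import dataclass, field
from typing import Dict, Protocol


class SpeechToText(Protocol):
    """Hypothetical contract that every ASR adapter satisfies."""

    def transcribe(self, audio: bytes) -> str: ...


class VendorAStt:
    """Stand-in for an adapter wrapping a commercial ASR engine."""

    def transcribe(self, audio: bytes) -> str:
        return f"vendor-a:{len(audio)} bytes"


class SelfHostedStt:
    """Stand-in for an adapter wrapping a self-hosted ASR model."""

    def transcribe(self, audio: bytes) -> str:
        return f"self-hosted:{len(audio)} bytes"


@dataclass
class SttRouter:
    """Picks an adapter per tenant, falling back to the default stack."""

    default: SpeechToText
    tenant_overrides: Dict[str, SpeechToText] = field(default_factory=dict)

    def for_tenant(self, tenant_id: str) -> SpeechToText:
        return self.tenant_overrides.get(tenant_id, self.default)


# Assumed example: one tenant is routed to a self-hosted engine.
router = SttRouter(
    default=VendorAStt(),
    tenant_overrides={"eu-tenant": SelfHostedStt()},
)
```

Because callers depend only on the `transcribe` contract, swapping an engine in this sketch means registering a different adapter; the calling code does not change.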

Telephony Integration

- Standards-based SIP & SRTP. HappyRobot speaks vanilla SIP over TLS for signaling and protects media with SRTP end-to-end, allowing seamless peering with tier-1 carriers, on-prem PBXs, and cloud voice platforms.

- Bring-your-own VoIP provider. Whether traffic arrives from Twilio, Telnyx, Vonage, a regional CLEC, or a direct SIP trunk, the edge gateways normalize signaling so the downstream call flow never changes.

- WebRTC endpoints. In addition to traditional phone networks, every bot can be surfaced as a secure WebRTC stream—ideal for embedding real-time voice inside web pages or mobile apps without plugins.

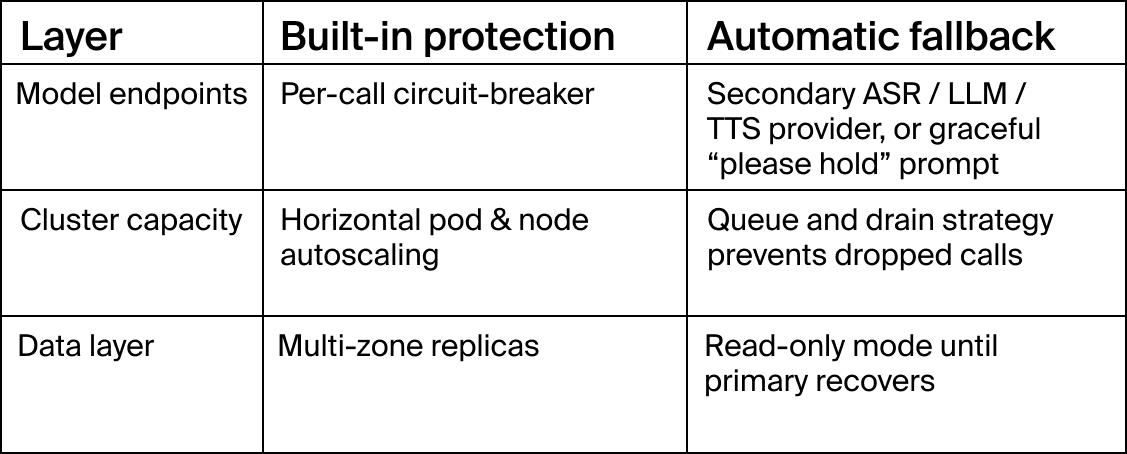

Resilience & Fallbacks

Scalability Envelope

- Voice. GPU-backed nodes are provisioned with headroom for real-time audio workloads. When traffic surges, the autoscaler brings additional capacity online fast enough to keep conversational latency within a range that still feels natural to callers.

- Messaging & APIs. Queue-driven workers expand horizontally as backlog or request rate increases, while HTTP edges scale on demand to preserve low tail-latency for webhook and REST traffic.

- Future headroom. Work is under way to cut provisioning time via pre-warmed images and to stream tokenisation, enabling multi-thousand concurrent calls per region without architectural change.
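
As a rough illustration of backlog-driven scaling for queue workers, the sketch below derives a target worker count from queue depth. The function name, the throughput figure, and the bounds are assumptions made for the example, not platform parameters.

```python
import math


def desired_workers(
    backlog: int,
    per_worker_throughput: int,
    min_workers: int = 1,
    max_workers: int = 50,
) -> int:
    """Return how many workers are needed to drain `backlog` items.

    `per_worker_throughput` is the number of items one worker can process
    per scaling interval (an assumed, illustrative quantity).
    """
    if per_worker_throughput <= 0:
        raise ValueError("per_worker_throughput must be positive")
    needed = math.ceil(backlog / per_worker_throughput)
    # Clamp to the configured floor and ceiling.
    return max(min_workers, min(max_workers, needed))
```

The clamp keeps a minimum number of workers warm when the queue is empty and caps growth during a burst.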

Security Snapshot

- Encryption everywhere. TLS 1.3 is enforced at all public edges (REST, webhooks, SIP-TLS) and for any outbound calls to external model endpoints.

- Identity & access management. OAuth-based SSO, MFA enforcement, fine-grained RBAC for both users and machine credentials.

- Continuous monitoring. Threat-detection feeds, anomaly alerts and policy audits drive an incident-response workflow aligned to SOC-2 controls.
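
For integrators calling the public edges, a matching TLS floor can be set on the client side. The following is a minimal sketch using Python's standard `ssl` module; the function name is illustrative, and this is not a description of HappyRobot's own server configuration.

```python
import ssl


def make_tls13_client_context() -> ssl.SSLContext:
    """Build a client context that refuses anything older than TLS 1.3."""
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx
```

The returned context can be passed to any standard-library client that accepts an `ssl.SSLContext`, for example `http.client.HTTPSConnection(host, context=ctx)`.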

AI models

A non-exhaustive list of models used in HappyRobot’s orchestration platform and voice stack. Some models are used off the shelf and others have required fine-tuning. We optimize for performance and fine-tune when off-the-shelf models don’t yield adequate results.

Large Language Model (LLM)

A large language model (LLM) serves as the central reasoning engine that interprets input, makes decisions, and coordinates actions. It ingests structured and unstructured data and uses its understanding of language and context to determine intent, generate responses, and trigger tools. Tools in HappyRobot can be leveraged by the LLM to make an API call, make a call transfer, send a message, or run some custom code.

The LLM acts as the connective layer between different AI components, enabling dynamic, context-aware orchestration without hardcoding every rule.
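
To make the tool mechanism concrete, here is a small sketch of how a tool call emitted by an LLM might be dispatched to code. The registry, the tool names (`transfer_call`, `send_message`), and the JSON shape of the tool call are all assumptions for illustration; the platform's real tool format is not described in this article.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping tool names to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


@tool("transfer_call")
def transfer_call(destination: str) -> dict:
    # A real implementation would signal the telephony layer.
    return {"status": "transferred", "destination": destination}


@tool("send_message")
def send_message(to: str, body: str) -> dict:
    # A real implementation would call a messaging provider.
    return {"status": "sent", "to": to, "chars": len(body)}


def dispatch(tool_call_json: str) -> dict:
    """Run the tool named in an LLM-produced call of the assumed form
    {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        return {"status": "error", "reason": f"unknown tool {call['name']!r}"}
    return fn(**call.get("arguments", {}))
```

Returning a structured error for unknown tools, rather than raising, lets the result be fed back to the model so it can recover within the conversation.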

We regularly evaluate LLM performance on cost, latency, and response quality. For each use case, or each “AI worker”, we select the LLM that performs the task most effectively and efficiently.

Text-to-Speech (TTS)

Text-to-speech (TTS) synthesis is the process of transforming written language into spoken words with natural intonation, rhythm, and clarity. It is more complex than simply splitting up words, converting them into speech separately, and then combining them. It requires the underlying TTS model to be able to understand the context of the text, and then generate the speech that matches the context in a way that is natural and human-like.

For instance, a question like “She didn’t go?” requires a rising intonation, while a statement “She didn’t go.” demands a falling contour. Just a simple change in punctuation can require a change in the intonation, and having the ability to deeply understand the context of the text is crucial for generating natural and human-like speech.

Handling this variation consistently is one of several ongoing challenges in TTS - as well as correctly pronouncing complex entities like numbers, managing short or abrupt sentences without sounding clipped, and maintaining fluency during transitions. These are active areas of refinement, and recent trends—like non-autoregressive synthesis—are helping improve speed and stability while preserving expressiveness.

Transcriber

A transcriber is a system—typically powered by automatic speech recognition (ASR)—that converts spoken language into written text. It listens to an audio stream and produces a time-aligned transcript, capturing what was said and often when it was said. Transcribers are foundational in voice interfaces, enabling search, analysis, and downstream processing by language models or analytics engines.

Jargon, accents, side conversations, background noise, and similar factors can cause transcription errors. These errors degrade the live conversation and also affect downstream processing and analysis. The challenges are often industry-specific, and our focus on supply chain allows us to fine-tune transcribers to overcome them.

To balance speed and accuracy, we use online transcription to power live interactions and then enhance transcripts offline for improved precision and consistency across analysis and audit workflows.

End-of-turn (EOT)

An End-of-Turn (EOT) model is a machine learning component used in voice-based systems to determine when a speaker has finished their turn in a conversation. It analyzes acoustic cues (like pauses or pitch drops), linguistic patterns, and timing to predict whether the user is done speaking. This allows AI systems to respond promptly without interrupting or creating unnatural gaps. EOT models are critical in real-time applications, where smooth, human-like interaction is essential.

EOT is often overlooked but it’s critical to the user experience and success of deploying AI into the real world. Even as foundation models become faster, knowing when to talk will remain a challenge. We fine-tune EOT models to handle the real-world scenarios of our customers.

Voice Activity Detection (VAD)

Voice Activity Detection (VAD) is a signal processing technique used to identify when speech is present in an audio stream. It distinguishes between voice and non-voice segments, helping systems ignore background noise, silence, or other non-speech sounds. VAD is often the first step in a voice processing pipeline, enabling downstream components—like ASR or EOT models—to activate only when someone is actually speaking.

VAD models still struggle with noisy environments, overlapping speech, and short or hesitant utterances, which can result in missed or false detections. There’s an inherent tradeoff between latency and accuracy - real-time systems need to minimize delay, but faster decisions increase the risk of errors. Combining VAD with transcription models and noise removal improves precision.

Techniques like language-aware filtering and dynamic thresholding offer promising paths forward.
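
Dynamic thresholding can be illustrated with a simple energy-based detector whose threshold tracks the background noise level. This is a textbook-style sketch, not HappyRobot's VAD model; the parameter names and default values (`ratio`, `adapt`, `initial_floor`, `min_floor`) are assumptions chosen for the example.

```python
import math
from typing import List, Sequence


def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square energy of one audio frame (samples in [-1, 1])."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def detect_speech(
    frames: Sequence[Sequence[float]],
    ratio: float = 3.0,
    adapt: float = 0.05,
    initial_floor: float = 0.01,
    min_floor: float = 1e-4,
) -> List[bool]:
    """Flag each frame as speech when its energy exceeds `ratio` times
    a running estimate of the noise floor."""
    noise_floor = initial_floor
    flags = []
    for frame in frames:
        energy = frame_rms(frame)
        is_speech = energy > ratio * noise_floor
        if not is_speech:
            # Adapt the noise floor only on non-speech frames, so speech
            # itself does not raise the threshold.
            noise_floor = max(min_floor, (1 - adapt) * noise_floor + adapt * energy)
        flags.append(is_speech)
    return flags
```

A higher `ratio` reduces false detections in noisy environments at the cost of missing quiet or hesitant speech, which is the latency and accuracy tradeoff noted above in miniature.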

AI Auditing

We take evaluations and communication quality very seriously: our customers trust us with the significant responsibility of contributing to their customer relationships, handling key business data, and carrying out their operations. Each day, our AI workers manage thousands of conversations and documents, and read and write data in databases.

While we also engage in manual auditing, the scale and complexity of monitoring agent behavior manually present significant challenges. To address this, we have developed an advanced AI-powered auditing system that combines large language models (LLMs), classical ML, and rule-based algorithms. This hybrid approach enables efficient and accurate detection of key issues, ensuring high standards of performance and compliance across all interactions.

Customer impact

Save time on manual monitoring

Instead of needing to monitor every single interaction, our customers can trust that call quality and end-user experience are constantly being monitored and reported on.

Alerting and minimizing time to resolution

We aim to provide transparency and fast resolution for issues seen in production settings for our agents. Our auditing system helps us proactively identify regressions, alerting our engineering teams and customers. The auditing system helps narrow down the failure and minimize the time to resolution.

Multimodal evaluations

In Voice AI systems, measuring quality involves transcripts, system logs, API responses, and importantly the actual voice. Having built our voice stack from the ground up, we pay key attention to that voice experience and audit all modalities of data available for a call.

What we measure

Our primary auditor is our Post-Call Auditor - a system that measures the quality of calls and detects key events/traits for our agents. Each of these is tied to SLAs and outcomes we aim to deliver for our customers. Below is a non-exhaustive selection of the metrics and quality categories our system tracks:

Voice Experience

Key Metrics:

- Interruption Counts: Tracks when AI speaks over customers, indicating poor conversation flow and creating frustrating user experiences.

- Latency: Measures response delay between customer speech and AI reply. Excessive latency breaks conversational rhythm and makes interactions feel unnatural.

- Transcription Accuracy: Measures speech-to-text conversion precision for customer input. Poor transcription leads to misunderstood requests and incorrect responses.
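
As an illustration of how the first two metrics could be derived from call data, the sketch below computes interruption counts and average response latency from timestamped turns. The `Turn` structure and the rule that an agent turn starting before the customer finishes counts as an interruption are assumptions for the example, not the Post-Call Auditor's actual definitions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Turn:
    speaker: str  # "customer" or "agent"
    start: float  # seconds from call start
    end: float


def voice_metrics(turns: List[Turn]) -> dict:
    """Count agent interruptions and average agent response latency."""
    latencies: List[float] = []
    interruptions = 0
    ordered = sorted(turns, key=lambda t: t.start)
    for prev, cur in zip(ordered, ordered[1:]):
        if prev.speaker == "customer" and cur.speaker == "agent":
            gap = cur.start - prev.end
            if gap < 0:
                # Agent began speaking before the customer finished.
                interruptions += 1
            else:
                latencies.append(gap)
    avg: Optional[float] = sum(latencies) / len(latencies) if latencies else None
    return {"interruptions": interruptions, "avg_latency_s": avg}
```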

User Engagement and Conversational Flow

Key Metrics:

- Escalation Requests: Tracks when customers ask for human agents, indicating AI limitations or dissatisfaction. High escalation rates suggest the AI isn't meeting customer needs effectively.

- Sentiment Scores: Monitors customer emotional state throughout the call. Declining sentiment alerts to potential issues before they become escalations.

- Turn-Taking Ratios: Monitors balance between AI and customer speaking time. AI domination suggests poor listening; customer domination may indicate confusion.
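
A turn-taking ratio can be approximated as the agent's share of total speaking time. The segment format `(speaker, start, end)` and the function name below are illustrative assumptions.

```python
from typing import Iterable, Optional, Tuple


def agent_talk_share(segments: Iterable[Tuple[str, float, float]]) -> Optional[float]:
    """Fraction of total speaking time taken by the agent, or None if
    nobody spoke. Each segment is (speaker, start_s, end_s)."""
    totals = {"agent": 0.0, "customer": 0.0}
    for speaker, start, end in segments:
        totals[speaker] += max(0.0, end - start)
    total = totals["agent"] + totals["customer"]
    return totals["agent"] / total if total else None
```

A value near 1.0 would correspond to the agent dominating the call, and a value near 0.0 to the customer dominating it.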

Agent Autonomy and Human-Computer Collaboration

Key Metrics:

- Human Transfer Rate: Measures frequency of calls requiring human intervention, indicating AI capability limits. High transfer rates suggest training gaps or overly complex customer issues.

- Transfer Trigger Analysis: Categorizes reasons for human handoff to identify patterns and improvement opportunities. Understanding why transfers happen helps optimize AI training and capabilities.

- Autonomous Resolution Rate: Tracks percentage of issues resolved without human intervention. Higher autonomy reduces operational costs and improves scalability.

- Transfer Smoothness Score: Evaluates quality of handoff process to human agents. Smooth transfers maintain customer satisfaction and operational efficiency.

Data Accuracy and Tool Execution

Key Metrics:

- Tool Selection Accuracy: Measures choosing the right tool for specific customer needs. Wrong tool selection wastes time and may provide incorrect information.

- Retry Logic Execution: Evaluates proper handling of transient or network errors with external APIs. Good retry logic prevents temporary issues from becoming permanent failures.

- Information Accuracy: Ensures correctness of data retrieved and communicated to customers. Inaccurate information damages trust and can lead to business consequences.
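
The kind of retry behavior this metric evaluates can be sketched as exponential backoff with jitter. The `TransientError` class, the function name, and the default delays are assumptions for illustration and are not taken from the platform.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for errors worth retrying (timeouts, 5xx)."""


def call_with_retry(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.2,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `fn`, retrying on TransientError with capped exponential
    backoff and full jitter. Other exceptions propagate immediately."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # Give up: surface the last transient failure.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
    raise RuntimeError("unreachable: max_attempts must be >= 1")
```

Only errors marked as transient are retried in this sketch, so a permanent failure such as a validation error is reported immediately instead of being repeated.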

Call Efficiency and Business Outcomes

Key Metrics:

- Call Durations: Monitors call length against the expected range for each type of resolution. Excessively long calls indicate inefficiency; calls that are too short may suggest incomplete resolution.

- Time to Reach Key Actions: Measures speed of achieving primary call objectives like appointments or sales. Faster resolution improves customer satisfaction and operational efficiency.

- Call Resolution Rate: Measures successful achievement of intended call goals. Low resolution rates indicate AI training gaps or process issues.

- Conversion Rates: Tracks success in sales, appointments, or desired customer actions. Directly ties AI performance to business outcomes and revenue generation.

Next in AI auditing - who audits the auditor

A growing trend in AI evaluation systems, especially those that incorporate Large Language Models, is the notion of “Who validates the validators?” (see academic papers focused on this topic). It’s an important question: how do we know our AI auditor is correctly catching the regressions in our AI agents?

Furthermore, for an auditor to be truly helpful, it must demonstrate both high recall (identifying all the cases where there were regressions) and high precision (alerting for only those cases where there were indeed regressions), meaning that our AI auditing system must have a high F-score. To ensure this is true, we measure how much our AI auditor agrees with human auditing across different types of voice interactions.
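
For reference, precision, recall, and the F1 score (the harmonic mean of the two) can be computed from paired auditor and human labels as in the sketch below. The function name and the boolean label format are assumptions for the example; which F-score variant the auditing system actually uses is not stated here.

```python
from typing import Sequence, Tuple


def agreement_scores(
    auditor_flags: Sequence[bool],
    human_flags: Sequence[bool],
) -> Tuple[float, float, float]:
    """Return (precision, recall, f1), treating human labels as ground
    truth. True means "this call contained a regression"."""
    if len(auditor_flags) != len(human_flags):
        raise ValueError("label sequences must have the same length")
    pairs = list(zip(auditor_flags, human_flags))
    tp = sum(1 for a, h in pairs if a and h)      # correctly flagged
    fp = sum(1 for a, h in pairs if a and not h)  # false alarms
    fn = sum(1 for a, h in pairs if not a and h)  # missed regressions
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Computing these scores separately for each type of voice interaction would show where the auditor and human reviewers disagree most.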